Freeform Robot

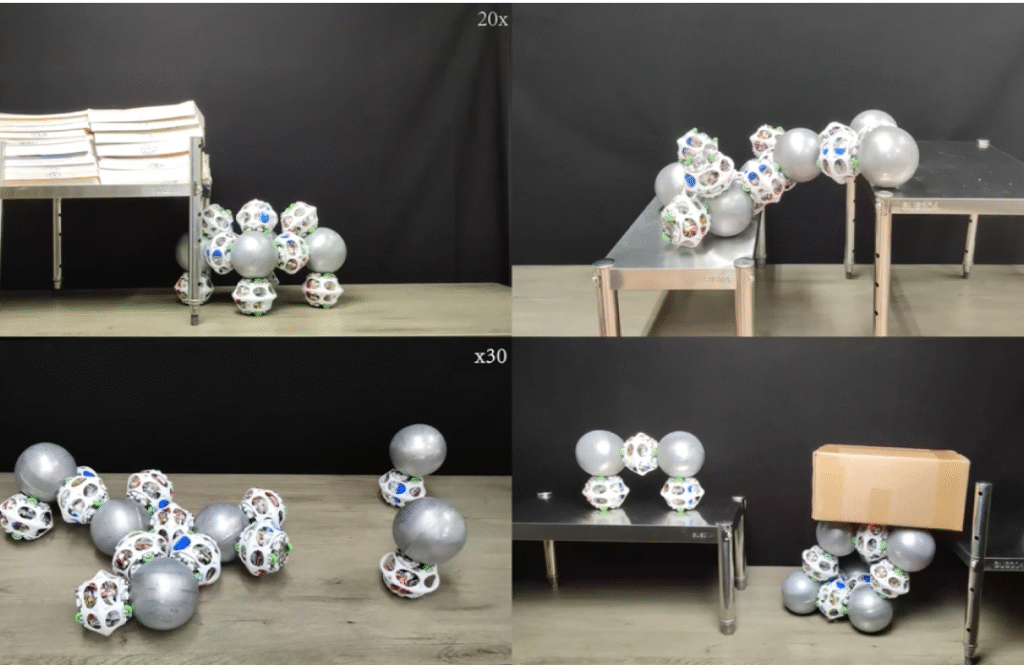

Modular self-reconfigurable robotic systems have strong self-adaptation and self-healing abilities, which enable them to cope with a wide variety of tasks in complex and changing environments. Most existing modular self-reconfigurable robots impose connection constraints on their mechanical structure, allowing modules to connect only at predefined points. Their relative positioning and motion planning capabilities are also limited to structured environments, which differ from real, changing environments. To overcome these limitations, this project aims to develop key technologies that allow modular self-reconfigurable robots to operate in unstructured environments. The results can lay a theoretical and technical foundation for robotic swarms and field robots, with wide applications in search and rescue, space exploration, and other areas.

Metal Spheres Swarm Together to Create Freeform Modular Robots – IEEE Spectrum

Magnetic FreeBOT orbs work together to climb large obstacles – Engadget

- Yuxiao Tu, Guanqi Liang, Di Wu, Xinzhuo Li, Tin Lun Lam, “Locomotion and Self-reconfiguration Autonomy for Spherical Freeform Modular Robots,” International Journal of Robotics Research (IJRR), July 2025. [paper] [video]

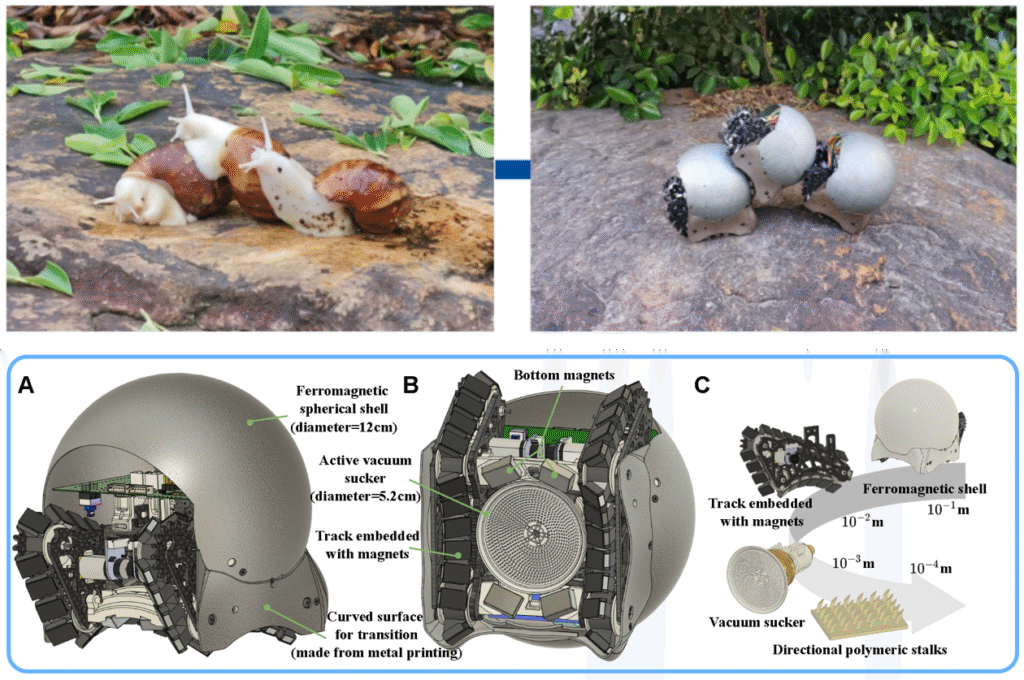

- Da Zhao, Haobo Luo, Yuxiao Tu, Chongxi Meng, Tin Lun Lam, “Snail-Inspired Robotic Swarms: A Hybrid Connector Drives Collective Adaptation in Unstructured Outdoor Environments,” Nature Communications, April 29, 2024. [paper] [video]

- Guanqi Liang, Haobo Luo, Ming Li, Huihuan Qian and Tin Lun Lam, “FreeBOT: A Freeform Modular Self-reconfigurable Robot with Arbitrary Connection Point – Design and Implementation,” Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA (Virtual), October 25-29, 2020. [paper] [video] [IROS Best Paper Award on Robot Mechanisms and Design]

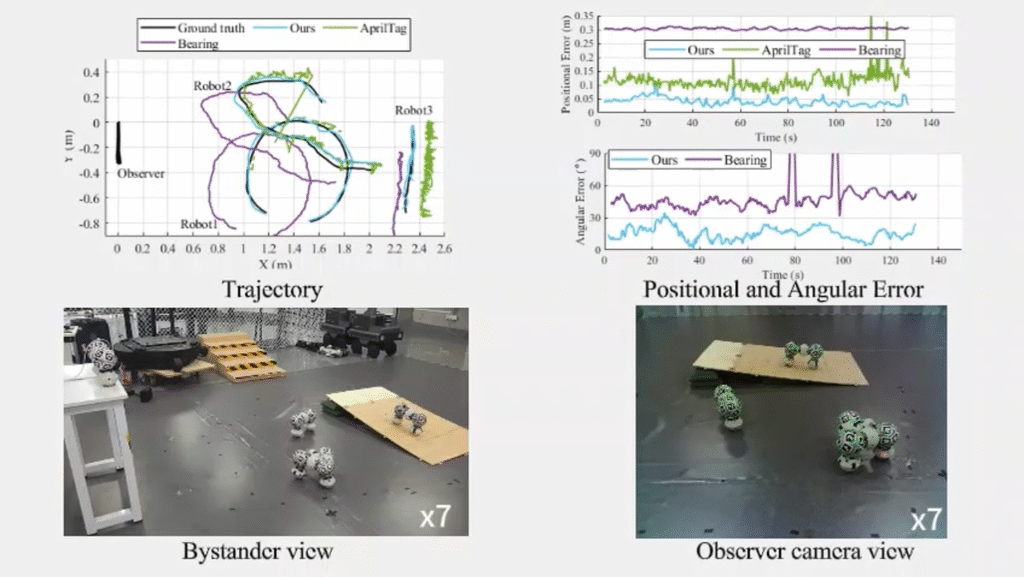

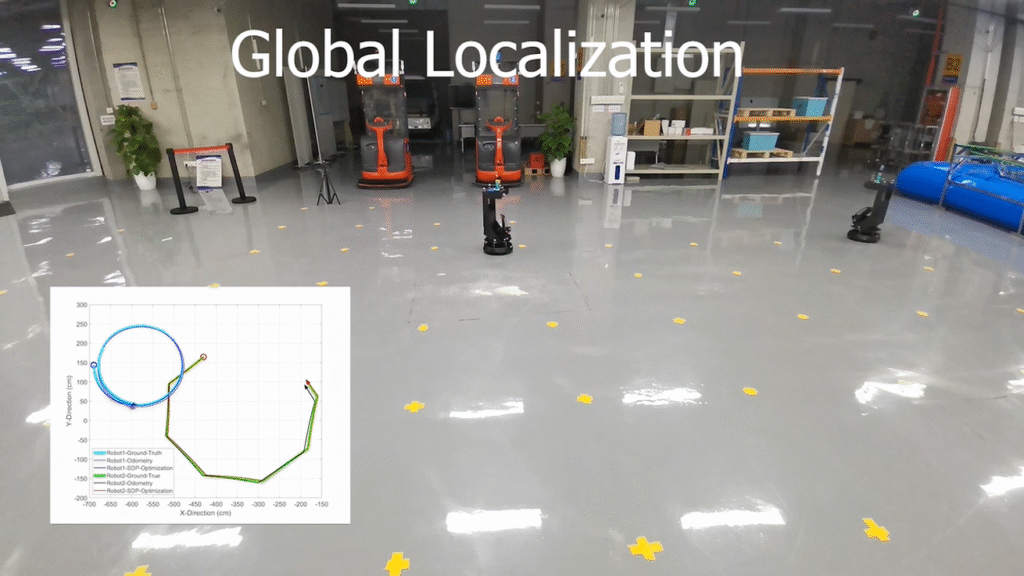

Multi-robot Self-contained Relative Localization

Multi-robot relative localization has attracted growing interest in the robotics community in recent years. Robots may need to localize one another at distances ranging from direct contact (0 mm) to more than 100 m, and each robot may operate independently within the workspace. Because no single method is accurate enough across this full range, we address different distance ranges with different approaches. The relative localization techniques we evaluate include magnetic arrays, direct visual detection and measurement, ultra-wideband (UWB) ranging, and visual semantic landmarks.
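To make the range-based part of this concrete, here is a minimal Python sketch that estimates one robot's 2D position from UWB-style range measurements to points at known positions (other robots or fixed anchors). It uses plain Gauss-Newton nonlinear least squares, not the asymptotically efficient estimator from the TMECH paper in this section; the function name `gauss_newton_trilaterate`, the known anchor positions, and the 2D setting are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def gauss_newton_trilaterate(anchors: List[Point], ranges: List[float],
                             guess: Point, iters: int = 20) -> Point:
    """Hypothetical sketch: estimate a 2D position from ranges to known points.

    Minimises sum_i (||p - a_i|| - r_i)^2 with Gauss-Newton iterations.
    Assumes at least three non-collinear anchors and a reasonable initial guess.
    """
    x, y = guess
    for _ in range(iters):
        # Accumulate the 2x2 normal equations (J^T J) * step = -J^T * residual.
        h11 = h12 = h22 = g1 = g2 = 0.0
        for (ax, ay), r in zip(anchors, ranges):
            dx, dy = x - ax, y - ay
            d = math.hypot(dx, dy)
            if d < 1e-9:
                continue  # gradient undefined exactly at an anchor
            jx, jy = dx / d, dy / d  # gradient of ||p - a_i|| with respect to p
            res = d - r              # range residual
            h11 += jx * jx
            h12 += jx * jy
            h22 += jy * jy
            g1 += jx * res
            g2 += jy * res
        det = h11 * h22 - h12 * h12
        if abs(det) < 1e-12:
            break  # degenerate geometry, e.g. collinear anchors
        # Closed-form solve of the symmetric 2x2 system.
        step_x = -(h22 * g1 - h12 * g2) / det
        step_y = -(h11 * g2 - h12 * g1) / det
        x, y = x + step_x, y + step_y
        if math.hypot(step_x, step_y) < 1e-10:
            break  # converged
    return x, y


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    # Noise-free ranges from a true position of (3, 4).
    ranges = [math.hypot(3.0 - ax, 4.0 - ay) for ax, ay in anchors]
    print(gauss_newton_trilaterate(anchors, ranges, guess=(1.0, 1.0)))
```

With real UWB data the ranges are noisy and biased, so the estimate degrades with measurement noise and anchor geometry; this is one reason a single method is not accurate across the whole distance range described above.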

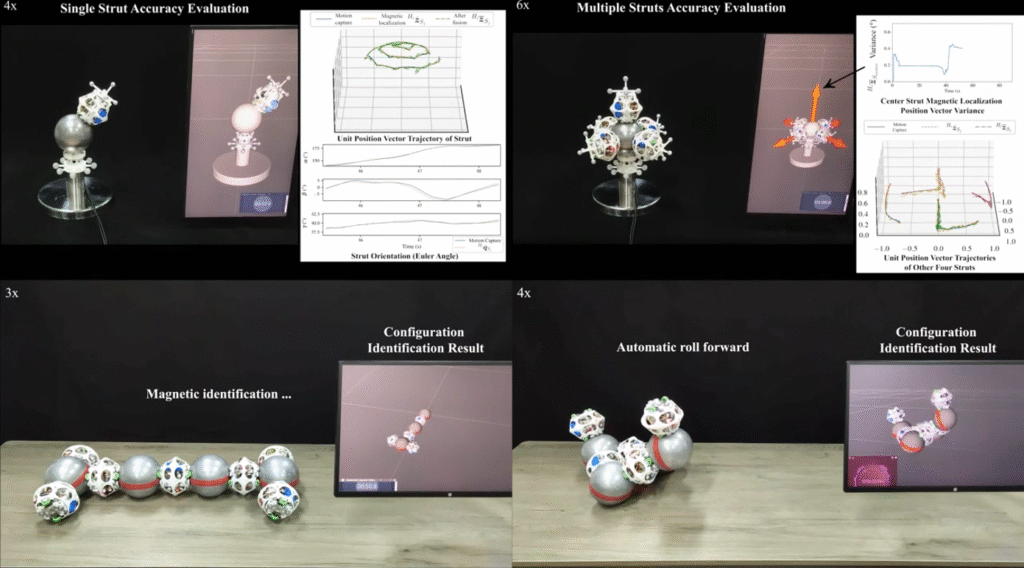

- Yuming Liu, Qiu Zheng, Yuxiao Tu, Yuan Gao, Guanqi Liang, Tin Lun Lam, “Configuration-Adaptive Visual Relative Localization for Spherical Modular Self-Reconfigurable Robots,” Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, May 19 – 23, 2025. [paper] [video] [2025 IEEE ICRA Best Conference Paper Award – Finalist]

- Yuxiao Tu, Tin Lun Lam, “Configuration Identification for a Freeform Modular Self-reconfigurable Robot – FreeSN,” IEEE Transactions on Robotics (T-RO), August 2023. [paper] [video]

- Yue Wang, Muhan Lin, Xinyi Xie, Yuan Gao, Fuqin Deng, Tin Lun Lam, “Asymptotically Efficient Estimator for Range-based Robot Relative Localization,” IEEE/ASME Transactions on Mechatronics (TMECH), June 2023. [paper] [video]

Collaborative Loco-manipulation

The collaboration between the University of Edinburgh (UoE) and the Shenzhen Institute of Artificial Intelligence and Robotics for Society (AIRS) pursues fundamental and applied research in artificial intelligence and robotics. Current research focuses on three scientific pillars: Multi-Contact Planning and Control, Multi-Agent Collaborative Manipulation, and Robot Perception.

PIs: Prof. Sethu Vijayakumar (UoE), Prof. Tin Lun Lam (AIRS)

More information: https://airs.cuhk.edu.cn/en/page/357 and http://web.inf.ed.ac.uk/slmc/research/projects-and-grants/uoe-airs-joint-project

- Lai Wei, Yanzhe Wang, Yibo Hu, Tin Lun Lam, Yanding Wei, “Online Dual Robot-Human Collaboration Trajectory Generation by Convex Optimization,” Robotics and Computer-Integrated Manufacturing (RCIM), Volume 91, February 2025, 102850. [paper]

- Xiaoyu Zhang, Lei Yan, Tin Lun Lam, Sethu Vijayakumar, “Task-Space Decomposed Motion Planning Framework for Multi-Robot Loco-Manipulation,” Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi'an, China, May 30 – June 5, 2021. [paper] [video]

- Zhangjie Tu, Tianwei Zhang, Lei Yan, Tin Lun Lam, “Whole-Body Control for Velocity-Controlled Mobile Collaborative Robots Using Coupling Dynamic Movement Primitives,” Proceedings of the IEEE-RAS International Conference on Humanoid Robots (Humanoids), Okinawa, Japan, November 28-30, 2022. [paper] [video]

- Chongxi Meng, Tianwei Zhang, Tin Lun Lam, “Fast and Comfortable Interactive Robot-to-Human Object Handover,” Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, October 23-27, 2022. [paper] [video]

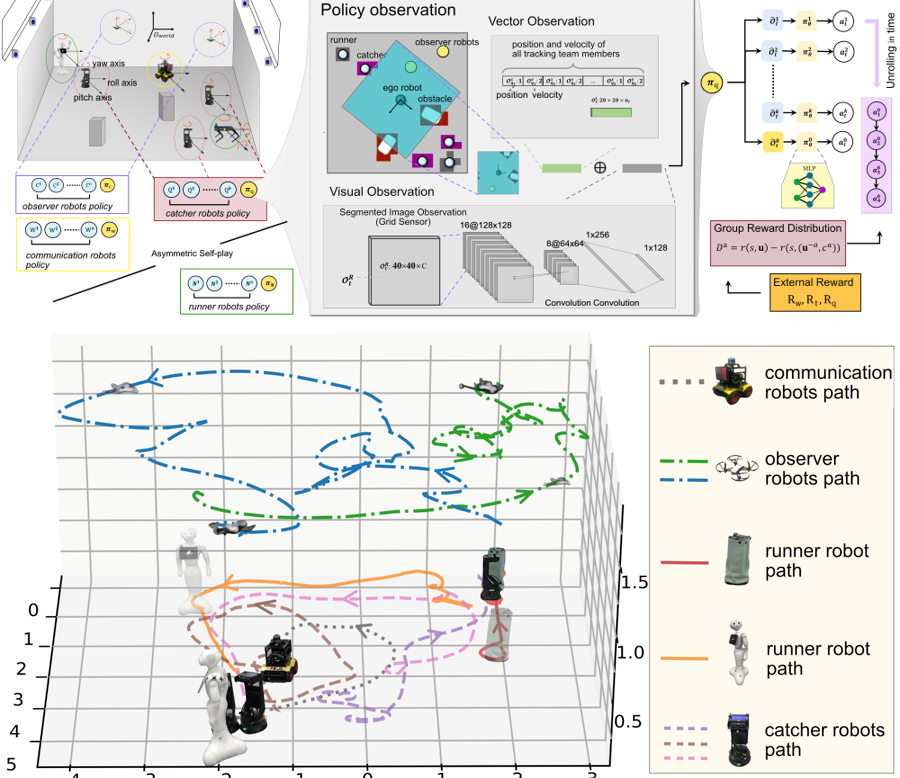

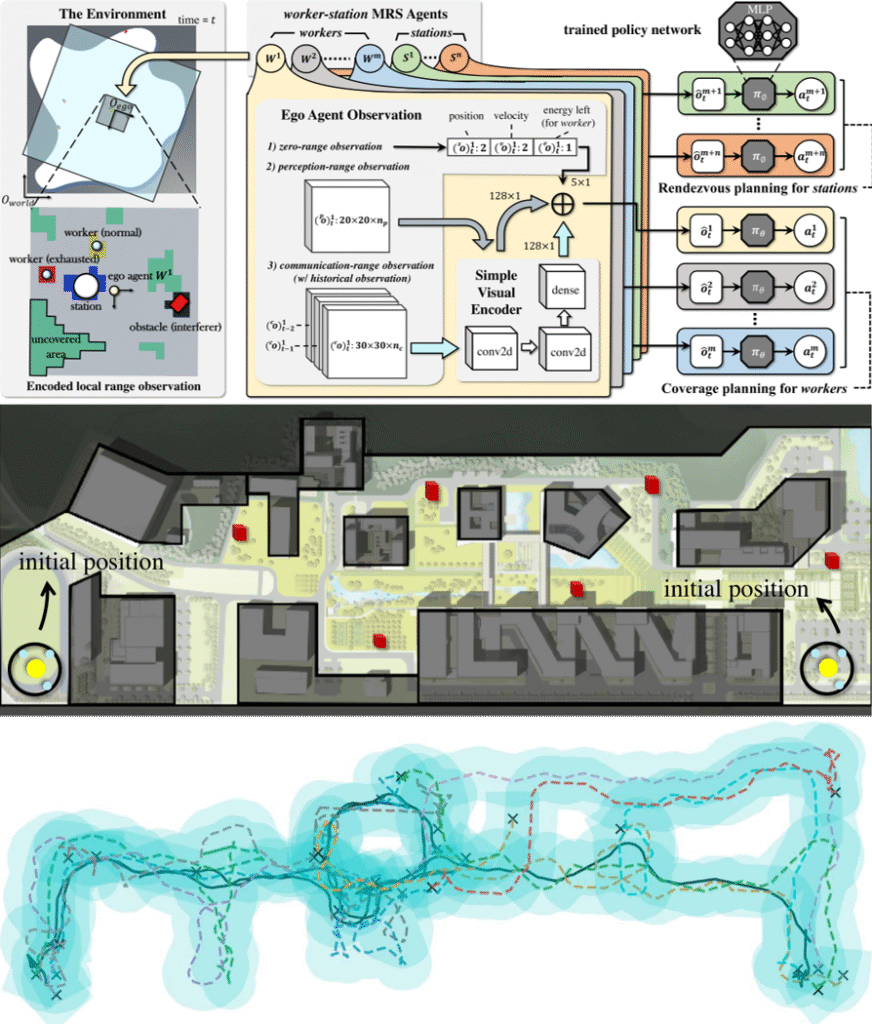

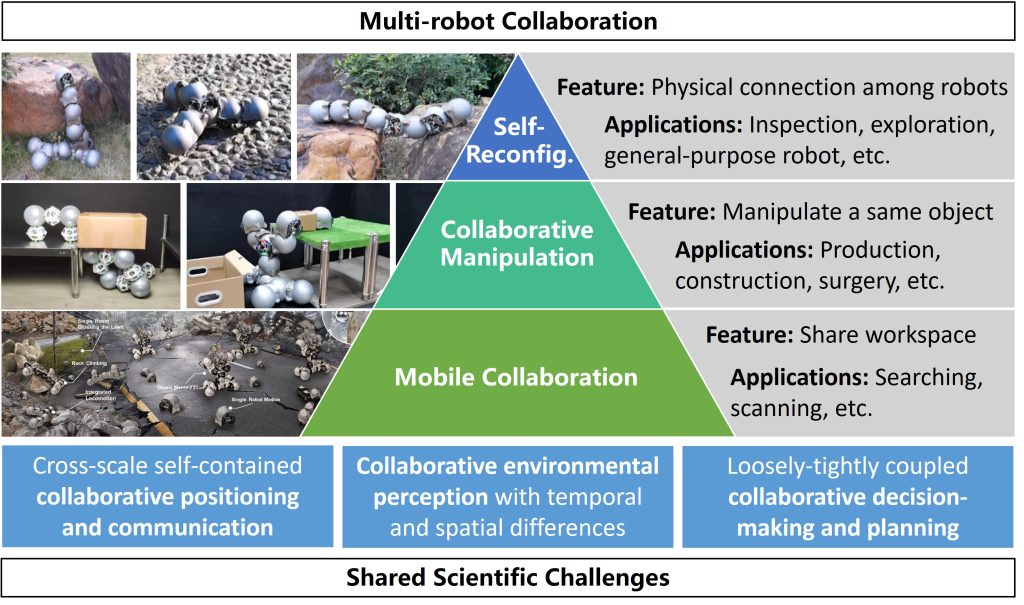

Heterogeneous Multi-robot Mobile Collaboration

A heterogeneous multi-robot cooperative system comprises a team of robots with different configurations that cooperate to accomplish complex tasks in dynamic environments. We study accurate modeling, real-time solution, and dynamic adaptation in collaborative planning for heterogeneous multi-robot systems. We formulate collaborative planning as a constrained multi-task assignment and scheduling model and solve and analyze it for specific task scenarios using operations research algorithms and machine learning techniques. The results provide theoretical support for deploying robots in complex, changing real-world environments. Potential applications include unmanned mines, security, counter-terrorism, dynamic path planning, and human-machine co-adaptation.
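As a rough illustration of the capability-constrained assignment part of such a model (assignment only, with no scheduling or learning), the hypothetical `assign_tasks` function below exhaustively searches one-to-one robot-task pairings, rejects any pairing in which a robot lacks a capability the task requires, and keeps the cheapest feasible plan. The capability sets, costs, robot and task names, and the one-task-per-robot rule are all invented for this sketch; exhaustive search only scales to a handful of robots, and larger instances would need the operations research solvers or learned policies mentioned above.

```python
from itertools import permutations
from typing import Dict, Optional, Set, Tuple

Plan = Dict[str, str]  # task name -> robot name


def assign_tasks(robot_caps: Dict[str, Set[str]],
                 task_reqs: Dict[str, Set[str]],
                 cost: Dict[Tuple[str, str], float]) -> Optional[Tuple[float, Plan]]:
    """Hypothetical sketch: cheapest one-to-one assignment under capability constraints.

    A robot may take a task only if its capability set covers the task's
    requirements. `cost` must contain every capability-feasible (robot, task)
    pair. Returns (total_cost, plan), or None if no feasible plan exists.
    """
    robots = sorted(robot_caps)
    tasks = sorted(task_reqs)
    if len(tasks) > len(robots):
        return None  # this sketch assumes at most one task per robot
    best: Optional[Tuple[float, Plan]] = None
    # Enumerate every ordered choice of distinct robots for the sorted task list.
    for chosen in permutations(robots, len(tasks)):
        plan = dict(zip(tasks, chosen))
        if not all(task_reqs[t] <= robot_caps[r] for t, r in plan.items()):
            continue  # some robot lacks a required capability
        total = sum(cost[(r, t)] for t, r in plan.items())
        if best is None or total < best[0]:
            best = (total, plan)
    return best


if __name__ == "__main__":
    caps = {"ugv1": {"drive", "lift"}, "ugv2": {"drive"}, "uav1": {"fly", "camera"}}
    reqs = {"inspect_roof": {"fly", "camera"}, "move_box": {"drive", "lift"}}
    cost = {("ugv1", "move_box"): 4.0, ("ugv2", "move_box"): 2.0,
            ("uav1", "inspect_roof"): 3.0}
    print(assign_tasks(caps, reqs, cost))
    # (7.0, {'inspect_roof': 'uav1', 'move_box': 'ugv1'})
```

In the usage example, `ugv2` is the cheapest robot for `move_box` but lacks the `lift` capability, so the search falls back to `ugv1`; this is the kind of heterogeneity constraint that distinguishes the problem from a plain cost-minimizing assignment.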

- Yuan Gao, Junfeng Chen, Xi Chen, Chongyang Wang, Junjie Hu, Fuqin Deng, Tin Lun Lam, “Asymmetric Self-Play-Enabled Intelligent Heterogeneous Multirobot Catching System Using Deep Multiagent Reinforcement Learning,” IEEE Transactions on Robotics (T-RO), April 2023. [paper] [video]

- Junjie Lu, Bi Zeng, Jingtao Tang, Tin Lun Lam and Junbin Wen, “TMSTC*: A Path Planning Algorithm for Minimizing Turns in Multi-robot Coverage,” IEEE Robotics and Automation Letters (RA-L), July 2023. [paper]

- Hoi-Yin Lee, Peng Zhou, Bin Zhang, Liuming Qiu, Bowen Fan, Anqing Duan, Jingtao Tang, Tin Lun Lam, and David Navarro-Alarcon, “A Distributed Dynamic Framework to Allocate Collaborative Tasks Based on Capability Matching in Heterogeneous Multi-Robot Systems,” IEEE Transactions on Cognitive and Developmental Systems (TCDS), April 2023. [paper] [video]

- Jingtao Tang, Yuan Gao, Tin Lun Lam, “Learning to Coordinate for a Worker-Station Multi-robot System in Planar Coverage Tasks,” IEEE Robotics and Automation Letters (RA-L), October 2022. [paper] [video]

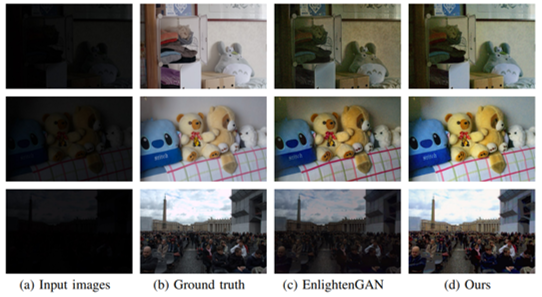

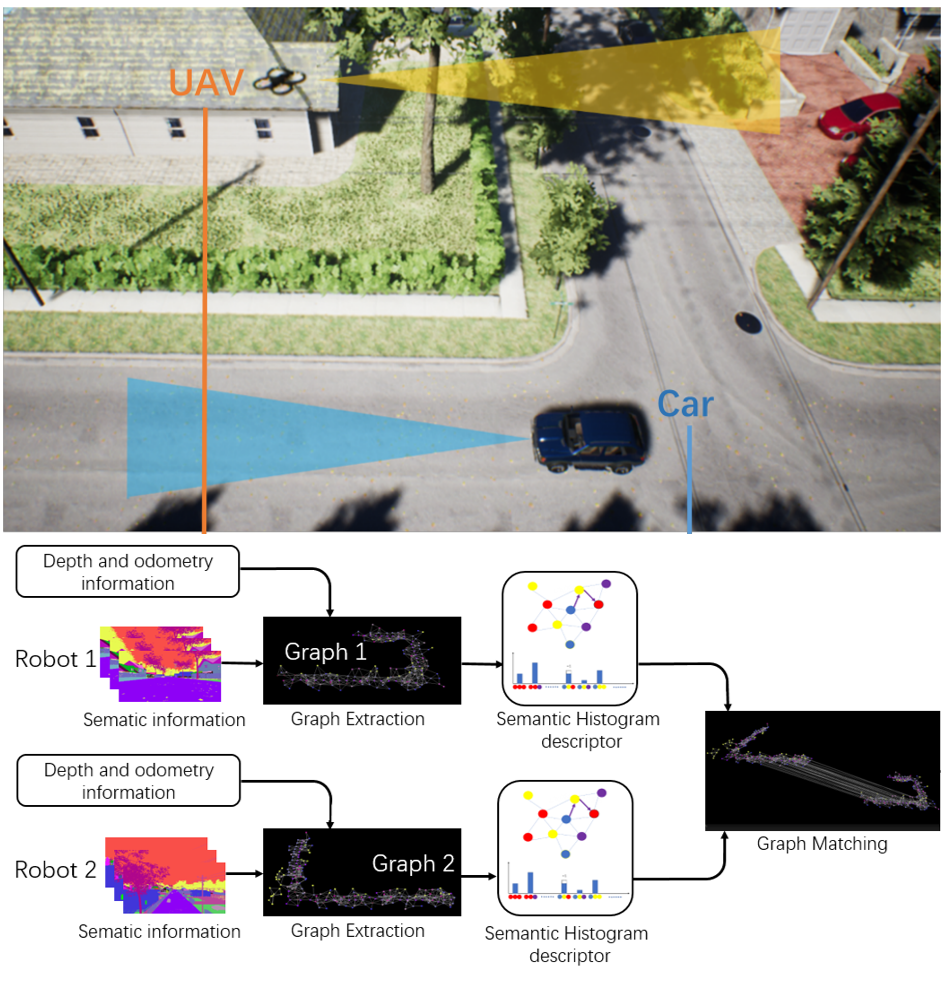

Multi-robot Environment Perception

In multi-robot systems, environment perception and data fusion from different sources are crucial for effective collaboration. This research aims to address the challenges associated with data matching and visual interference in such systems, focusing on three main issues:

- Data Matching Difficulty due to Time Differences: Differences in data collection times can lead to variations in lighting conditions, making it challenging to match and fuse data from multiple sources.

- Data Matching Difficulty due to Viewpoint Differences: The different perspectives from which robots collect data can cause inconsistencies, making it difficult to match and integrate the information for a comprehensive understanding of the environment.

- Visual Interference among Robots in the Same Environment: As multiple robots move and operate within the same environment, they may interfere with one another's visual sensing, further complicating data matching and fusion.

This research proposes solutions to these challenges in order to improve the overall performance of multi-robot collaborative systems in complex environments.

- Junjie Hu, Chenyou Fan, Mete Ozay, Qing Gao, Yulan Guo, Tin Lun Lam, “Robust Depth Estimation Under Sensor Degradations: A Multi-Sensor Fusion Perspective,” IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), June 2025. [paper]

- Junjie Hu, Chenyou Fan, Mete Ozay, Hua Feng, Yuan Gao, Tin Lun Lam, “Unlocking Drone Perception in Low AGL Heights: Progressive Semi-Supervised Learning for Ground-to-Aerial Perception Knowledge Transfer,” IEEE Transactions on Intelligent Transportation Systems (TITS), May 2025. [paper]

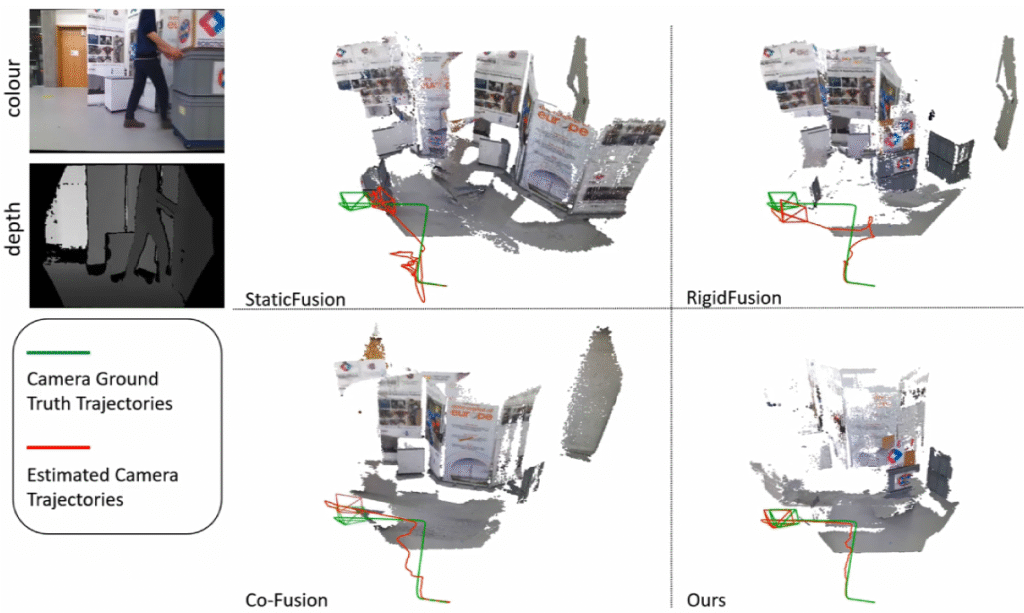

- Ran Long, Christian Rauch, Tianwei Zhang, Vladimir Ivan, Tin Lun Lam, Sethu Vijayakumar, “RGB-D SLAM in Indoor Planar Environments with Multiple Large Dynamic Objects,” IEEE Robotics and Automation Letters (RA-L), June 2022. [paper] [video]

- Xiyue Guo, Junjie Hu, Junfeng Chen, Fuqin Deng, Tin Lun Lam, “Semantic Histogram Based Graph Matching for Real-Time Multi-Robot Global Localization in Large Scale Environment,” IEEE Robotics and Automation Letters (RA-L), October 2021. [paper] [code] [video]

- Junjie Hu, Xiyue Guo, Junfeng Chen, Guanqi Liang, Fuqin Deng, Tin Lun Lam, “A Two-stage Unsupervised Approach for Low Light Image Enhancement,” IEEE Robotics and Automation Letters (RA-L), October 2021. [paper] [video]